Resources and insights

Our Blog

Explore insights and practical tips on mastering the Databricks Data Intelligence Platform and the full spectrum of today's modern data ecosystem.

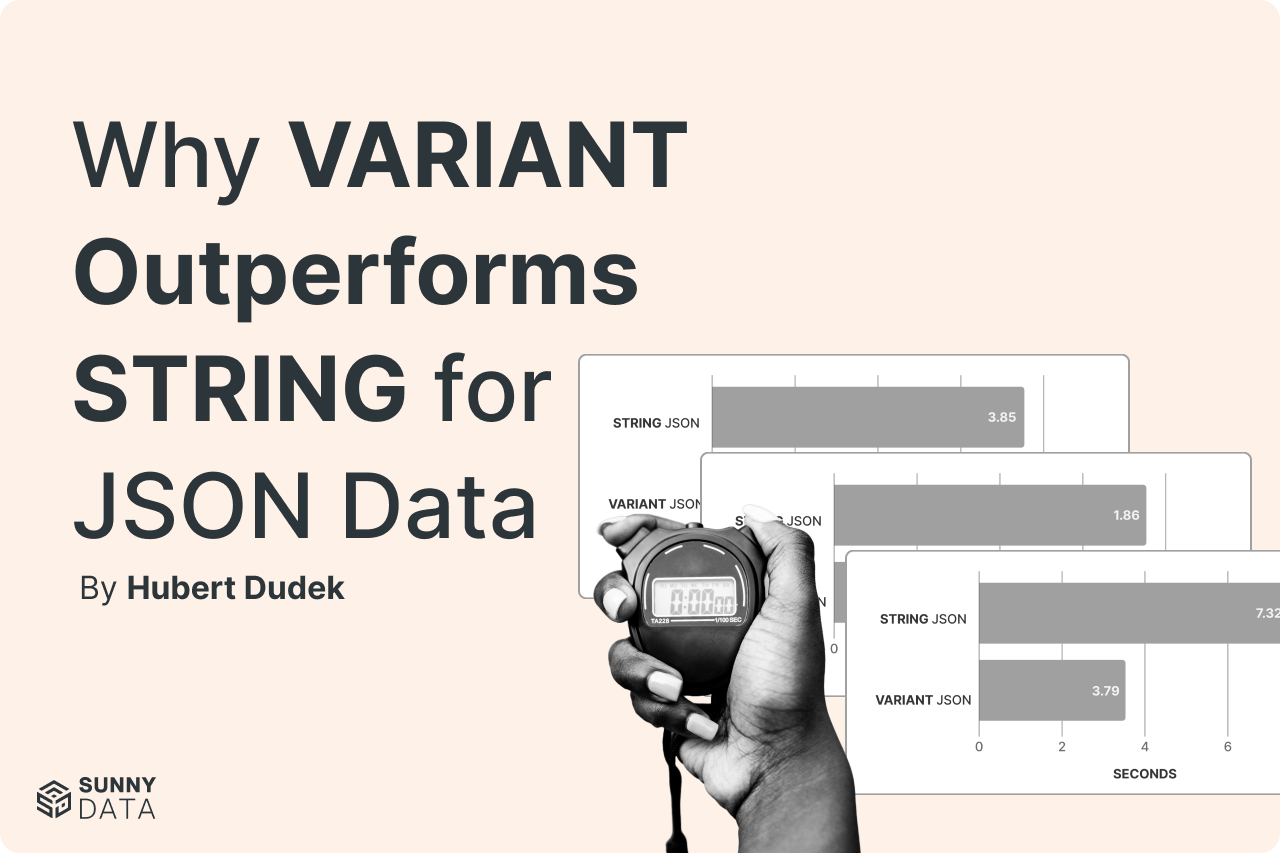

Why VARIANT Outperforms STRING for JSON Data

See how the Databricks VARIANT type outperforms STRING for JSON data, with 22% better storage efficiency and up to 50% faster queries.

The Hidden Benefits of Databricks' Managed Tables

Learn why Databricks managed tables outperform external tables. Real testing shows cost savings through optimized caching and reduced storage operations.

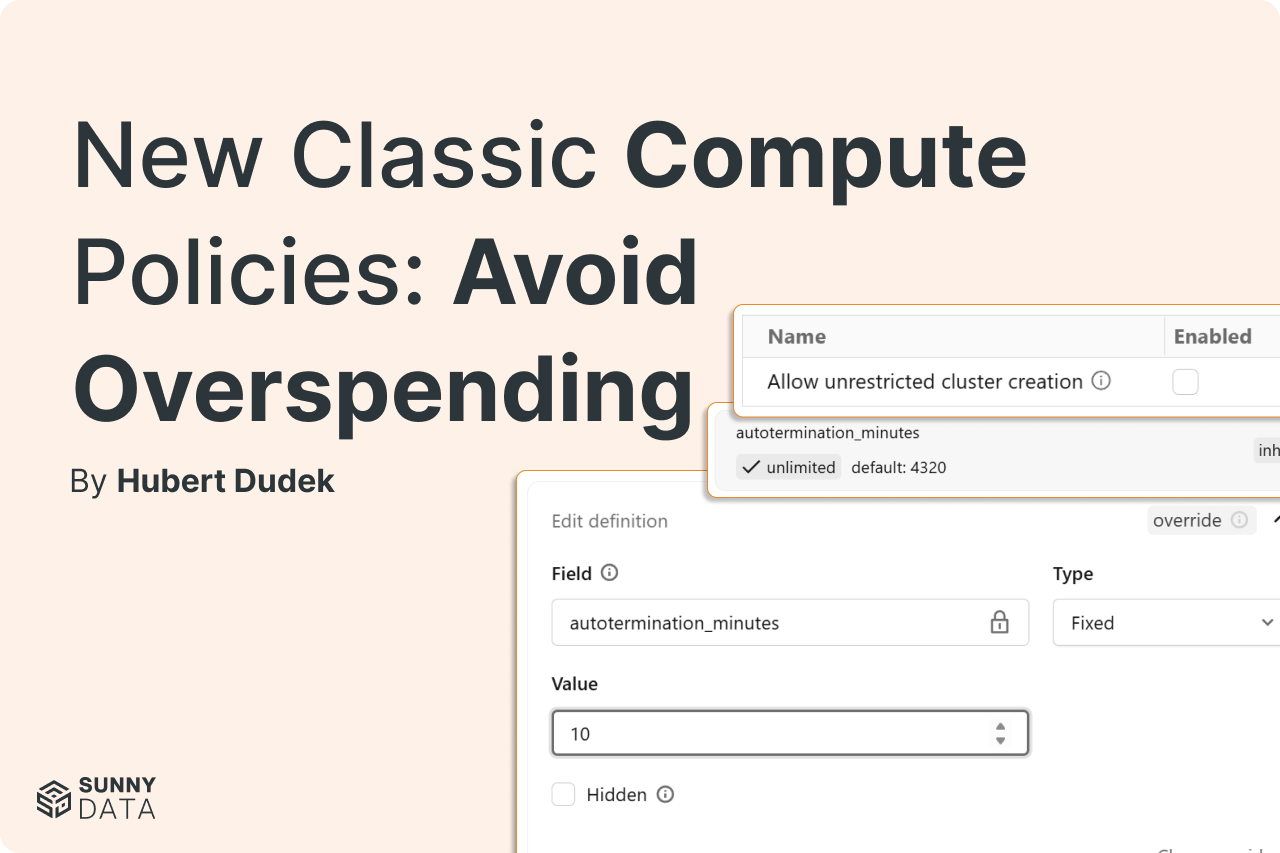

New Classic Compute Policies: Prevent Overspending

Configure Databricks compute policies to prevent accidental overspending. Step-by-step guide to setting auto-termination, worker limits, and access controls that protect your budget without limiting productivity.

Why Financial Institutions Are Ditching Vendor Solutions for Databricks

How 7 Databricks accelerators are helping banks and financial institutions replace expensive vendor solutions with custom in-house data applications for AML, fraud detection, customer analytics, and compliance.

CI/CD Best Practices: Passing tests isn't enough

CI/CD pipelines can pass all jobs yet still deploy broken functionality. This post covers smoke testing, regression testing, and critical validation strategies, which are especially useful for data projects where data quality is as important as code quality.

The Hidden Benefits of Databricks Serverless

Most Databricks cost comparisons focus only on compute pricing, missing two critical factors that can save thousands monthly. Learn how serverless waives private link transfer fees (up to $10/TB) and provides persistent remote caching that survives warehouse restarts. These hidden benefits often justify the serverless premium entirely.

The Chaos of Data: How Fragmentation is Stalling Innovation

Discover how data fragmentation across multiple platforms creates costly silos and slows decision-making. Learn why Databricks offers the unified solution modern enterprises need.

Cost allocation, cloud tags, and other relevant things on Databricks

In this three-part video series, Databricks MVP Josue Bogran and Greg Kroleski from Databricks’ “Money Team” discuss cost allocation, tagging best practices, cloud innovations, and performance enhancements. The series explores Databricks' efforts to optimize cost reporting, leverage AI for insights, and empower businesses to make smarter, growth-driven decisions in 2024 and beyond.

5 Reasons Why We Recommend Databricks

In this post, Josue Bogran explains the top 5 reasons Databricks stands out as the leading data platform, from unified data management and cost efficiency to performance and robust analytics. Learn how Databricks balances innovation, user-centric design, and industry versatility to deliver results.

Performance, Benchmarks, and Optimization Tips for Databricks Users

Josue Bogran interviews Jeremy Lewallen from Databricks’ Performance Team, exploring benchmarks, storage cost optimization, rightsizing SQL Serverless Compute, and common compute mistakes. Learn why Databricks continually enhances performance, get tips for using the latest Databricks Runtime (DBR) versions, and see how these innovations provide a fast, efficient, and developer-friendly data platform.

Databricks Myths vs. My Own Personal Experience

Transitioning to Databricks reduced costs by $19K/month and streamlined data operations. Learn how Databricks' unified platform simplifies data engineering and boosts efficiency in our latest blog.

Evaluating Databricks' Cost Control Features: A Closer Look at Budgets and Cost Dashboard

Evaluating Databricks' cost control features reveals strengths in granular tracking and user-friendly budgeting, but highlights areas for improvement in automated alerts and comprehensive expense tracking. Explore our insights on Databricks' budgeting tools.

Cost Saving Best Practices For Databricks Workflows

Discover how to manage pipeline costs effectively with Databricks Workflows. This article offers practical tips to reduce total cost of ownership without sacrificing performance, and provides insights into understanding your costs better. Learn strategies like using job compute and spot instances, setting warnings and timeouts, leveraging task dependencies, and implementing autoscaling and tagging. Optimize your resource usage and get the most out of your Databricks environment. Read on for actionable advice to streamline your data processes.

A Roadmap to a Successful AI Project: Planning, Execution & ROI

Disappointed by the high failure rate of AI initiatives? You're not alone. This post unveils the secrets to planning and executing a successful AI project. We detail our proven methodology for ensuring AI success, focusing on identifying high-ROI use cases and navigating the Proof-of-Concept stage effectively. Learn how to choose the right supplier, avoid common pitfalls, and ensure your AI project transitions smoothly from pilot to production, delivering real business results and a competitive edge.

Databricks Model Serving for end-to-end AI life-cycle management

In the evolving world of AI and ML, businesses demand efficient, secure ways to deploy and manage AI models. Databricks Model Serving offers a unified solution, enhancing security and streamlining integration. This platform ensures low-latency, scalable model deployment via a REST API, perfectly suited for web and client applications. It smartly scales to demand, using serverless computing to cut costs and improve response times, providing an effective, economical framework for enterprises navigating the complexities of AI model management.