Purpose for your All-Purpose Cluster

What if I told you that you could configure your all-purpose clusters to reject scheduled jobs entirely? This might sound counterintuitive, after all, aren't they called "all-purpose" for a reason?

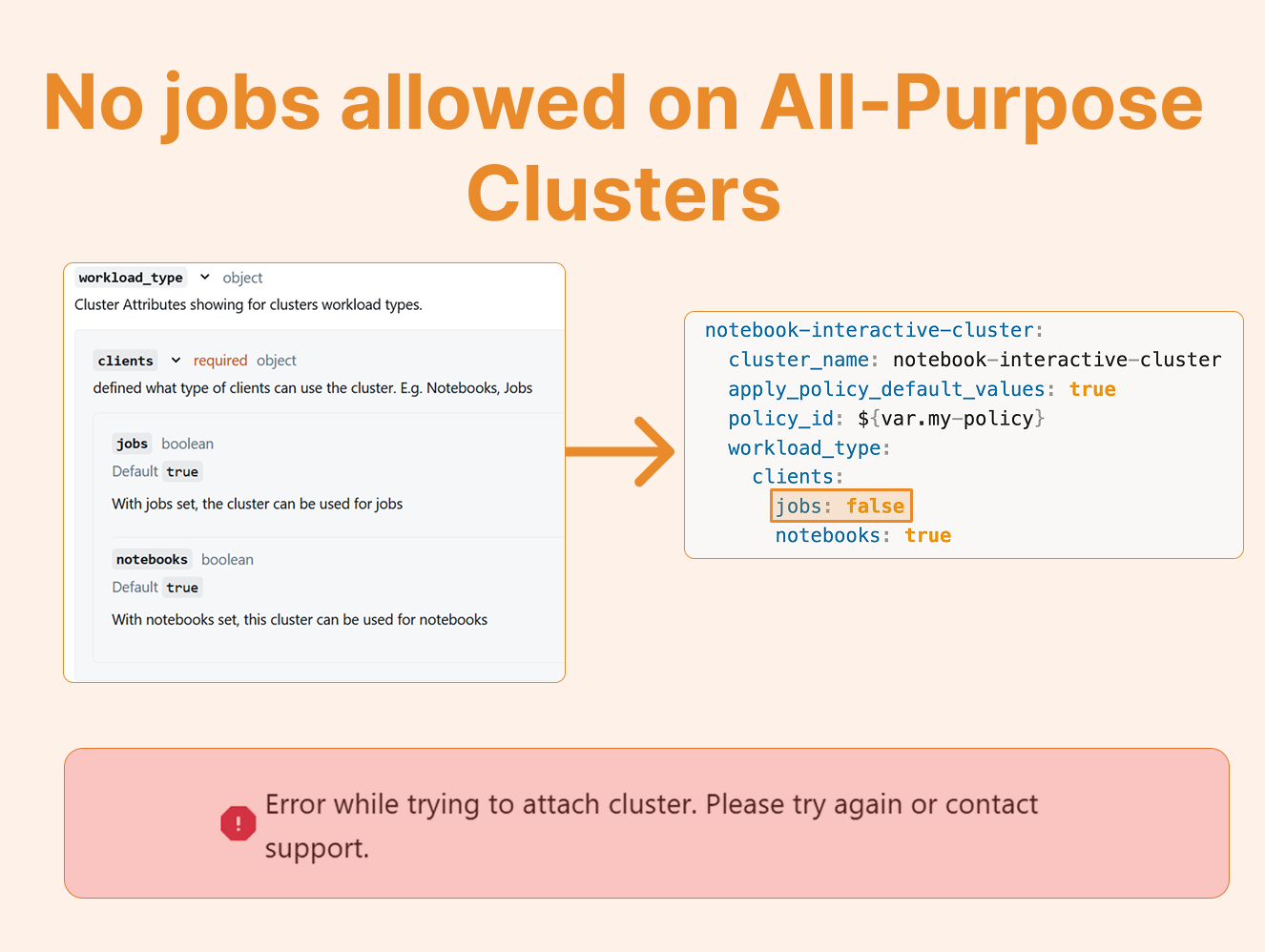

Recently, I discovered a hidden configuration option that lets you restrict all-purpose clusters to ONLY run notebooks, effectively blocking any scheduled jobs from using this expensive compute. This simple setting can save organizations significant money by forcing teams to use cost-effective job clusters for their scheduled workloads. Here's how it works and why you should care.

The Discovery

While setting up an interactive cluster, I stumbled upon the workload type configuration option:

This prompted me to experiment with creating two distinct interactive clusters using Databricks Asset Bundles (DABS): one dedicated to jobs and another for notebooks. Here's how I configured two separate clusters with different workload types:

resources:

clusters:

job-interactive-cluster:

cluster_name: job-interactive-cluster

apply_policy_default_values: true

policy_id: ${var.my-policy}

workload_type:

clients:

jobs: true

notebooks: false

notebook-interactive-cluster:

cluster_name: notebook-interactive-cluster

apply_policy_default_values: true

policy_id: ${var.my-policy}

workload_type:

clients:

jobs: false

notebooks: trueAfter deploying these configurations, both clusters appear in the compute list as expected. However, the workload type setting creates an important distinction:

When attaching a notebook to a cluster, only the notebook-interactive-cluster appears in the selection list. The job-only cluster is hidden from this view.

Similarly, when scheduling the same notebook as a job, only the job-interactive-cluster is available for selection.

If you attempt to run a notebook on a jobs-only cluster (or vice versa), Databricks will throw an error, preventing the misuse of compute resources.

Advantages and Disadvantages

Job-Interactive-Cluster (jobs: true, notebooks: false)

Key Points:

Still billed as all-purpose compute despite the restriction

No cost savings compared to standard all-purpose clusters

Can be useful in specific production scenarios

When to Use: While it's possible to run production jobs on interactive clusters, I generally recommend exploring alternative architectures such as:

Job-specific clusters (which terminate after job completion)

Instance pools for faster startup times

Notebook-Interactive-Cluster (jobs: false, notebooks: true)

Key Points:

Enforces best practices by preventing job execution on all-purpose compute

Helps control costs by ensuring jobs use appropriate compute types

Maintains clear separation between development and production workloads

This configuration is particularly valuable because it prevents accidental costs (teams can't inadvertently run scheduled jobs on expensive all-purpose compute), enforces governance (creates a boundary between interactive dev and production workloads), and simplifies cluster selection (devs. see only relevant clusters for their current task).

Conclusion

The workload type feature in Databricks might seem minor, but it's a powerful tool for implementing compute governance and cost control. By explicitly defining what each cluster can be used for, you create natural boundaries that guide users toward appropriate compute choices. For organizations looking to optimize their Databricks costs while maintaining development flexibility, implementing workload-type restrictions on interactive clusters is a simple yet effective strategy.